This post covers how to resolve the Cassandra note “Non-system keyspaces don’t have the same replication settings, effective ownership information is meaningless”. Whether it needs fixing depends on your infrastructure design, but in most cases it should be fixed. The note appears at the bottom of the nodetool status output:

    Datacenter: datacenter1
    =======================
    Status=Up/Down
    |/ State=Normal/Leaving/Joining/Moving
    --  Address        Load        Tokens  Owns  Host ID                               Rack
    UN  192.168.88.29  247.05 MiB  16      ?     68ba4bca-7eef-4583-9fbe-b0f7851f126a  rack1
    UN  192.168.88.34  223.11 MiB  16      ?     53325ce7-52c7-433e-8ff1-8630d698a439  rack1
    UN  192.168.88.31  218.39 MiB  16      ?     c9e9d227-1d8d-4206-9f5b-bb684eb442e6  rack1

    Note: Non-system keyspaces don't have the same replication settings, effective ownership information is meaningless

The same information also appears when you run nodetool describecluster.

Solution: Fix the Replication Factor

The note appears because the non-system (user) keyspaces do not all use the same replication settings. In this case I have 3 nodes, and most keyspaces use replication factor 3, but one keyspace uses replication factor 2.

For example, this is the status of the ecommerce keyspace:

    $ nodetool status ecommerce
    Datacenter: datacenter1
    =======================
    Status=Up/Down
    |/ State=Normal/Leaving/Joining/Moving
    --  Address        Load        Tokens  Owns (effective)  Host ID                               Rack
    UN  192.168.88.29  247.05 MiB  16      100.0%            68ba4bca-7eef-4583-9fbe-b0f7851f126a  rack1
    UN  192.168.88.34  223.11 MiB  16      100.0%            53325ce7-52c7-433e-8ff1-8630d698a439  rack1
    UN  192.168.88.31  218.39 MiB  16      100.0%            c9e9d227-1d8d-4206-9f5b-bb684eb442e6  rack1

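Here the note does not appear: when a keyspace is given, nodetool computes effective ownership for that keyspace alone. The ecommerce keyspace uses replication factor 3, so on 3 nodes every node holds a full copy and each shows 100%.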
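A quick way to read the Owns (effective) column, assuming a single datacenter with SimpleStrategy and a replication factor RF no larger than the number of nodes N: every token range is stored on exactly RF nodes, so the per-node values add up to RF × 100%.

$$
\sum_{i=1}^{N} \mathrm{Owns}_i = RF \times 100\%, \qquad \overline{\mathrm{Owns}} = \frac{RF}{N} \times 100\%
$$

For ecommerce, RF = N = 3, so every node stores every range and each node shows 100%. A keyspace with RF = 2 on these 3 nodes would instead total 200%, about 66.7% per node on average, with individual nodes above or below that depending on token placement.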

To see the replication factor of each keyspace, log in to cqlsh as a Cassandra admin user:

    cqlsh -u USER -p PASSWORD IP-SERVER-CASSANDRA

    cassandra@cqlsh> SELECT * FROM system_schema.keyspaces;

     keyspace_name      | durable_writes | replication
    --------------------+----------------+-------------------------------------------------------------------------------------
              ecommerce |           True | {'class': 'org.apache.cassandra.locator.SimpleStrategy', 'replication_factor': '3'}
            system_auth |           True | {'class': 'org.apache.cassandra.locator.SimpleStrategy', 'replication_factor': '1'}
          system_schema |           True | {'class': 'org.apache.cassandra.locator.LocalStrategy'}
     system_distributed |           True | {'class': 'org.apache.cassandra.locator.SimpleStrategy', 'replication_factor': '3'}
                 system |           True | {'class': 'org.apache.cassandra.locator.LocalStrategy'}
              commerces |           True | {'class': 'org.apache.cassandra.locator.SimpleStrategy', 'replication_factor': '2'}
          system_traces |           True | {'class': 'org.apache.cassandra.locator.SimpleStrategy', 'replication_factor': '2'}
                   data |           True | {'class': 'org.apache.cassandra.locator.SimpleStrategy', 'replication_factor': '3'}

    (8 rows)
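The mismatch can also be spotted mechanically from this output. The sketch below assumes bash and awk, treats any keyspace whose name starts with system as a system keyspace (an assumption, not Cassandra's exact rule), and compares the replication text literally, which is a simplification of Cassandra's own settings comparison. Both function names are hypothetical helpers, not part of Cassandra.

```bash
#!/usr/bin/env bash
# Sketch: read the table printed by
#   SELECT * FROM system_schema.keyspaces;
# and print "keyspace<TAB>replication" for every user keyspace.
# Assumption: names starting with "system" are system keyspaces and are skipped.
user_keyspace_replication() {
  awk -F'|' '
    NF >= 3 {
      name = $1; repl = $3
      # Trim the padding cqlsh adds around each column.
      gsub(/^[ \t]+|[ \t]+$/, "", name)
      gsub(/^[ \t]+|[ \t]+$/, "", repl)
      if (name == "keyspace_name" || name ~ /^system/) next
      print name "\t" repl
    }'
}

# Return 0 if all user keyspaces share one replication setting (compared as text),
# otherwise print the per-keyspace settings and return 1.
check_same_replication() {
  local rows distinct
  rows="$(user_keyspace_replication)"
  distinct="$(printf '%s\n' "$rows" | cut -f2 | sort -u | awk 'NF { n++ } END { print n + 0 }')"
  if [ "$distinct" -le 1 ]; then
    echo "OK: all user keyspaces share the same replication settings"
    return 0
  fi
  echo "MISMATCH: $distinct different replication settings:"
  printf '%s\n' "$rows"
  return 1
}
```

One way to feed it is cqlsh's -e option: `cqlsh -u USER -p PASSWORD IP-SERVER-CASSANDRA -e "SELECT * FROM system_schema.keyspaces;" | check_same_replication`. On the output above it should report a mismatch, since commerces uses replication_factor 2 while ecommerce and data use 3.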

Look at the replication_factor values: apart from Cassandra's system keyspaces, the commerces keyspace has replication_factor 2, while ecommerce and data use 3. Check it with nodetool:

    $ nodetool status commerces
    Datacenter: datacenter1
    =======================
    Status=Up/Down
    |/ State=Normal/Leaving/Joining/Moving
    --  Address        Load        Tokens  Owns (effective)  Host ID                               Rack
    UN  192.168.88.29  247.05 MiB  16      76.0%             68ba4bca-7eef-4583-9fbe-b0f7851f126a  rack1
    UN  192.168.88.34  223.11 MiB  16      64.7%             53325ce7-52c7-433e-8ff1-8630d698a439  rack1
    UN  192.168.88.31  218.39 MiB  16      59.3%             c9e9d227-1d8d-4206-9f5b-bb684eb442e6  rack1
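To confirm what replication factor a keyspace's ownership figures imply, the percentages can simply be added up. This is a sketch assuming bash and awk, and assuming that node lines start with the two-letter status/state code (UN, DN, ...) and that the ownership value is the only field ending in %. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: sum the "Owns (effective)" column of `nodetool status <keyspace>`.
# Assumption: node lines start with a status/state code such as UN or DN,
# and the ownership value is the only field on that line ending in '%'.
sum_effective_ownership() {
  awk '
    /^[UD][NLJM] / {
      for (i = 1; i <= NF; i++) {
        if ($i ~ /%$/) {
          v = $i
          sub(/%$/, "", v)   # strip the percent sign before adding
          total += v
        }
      }
    }
    END { printf "%.1f\n", total }'
}

# Replication factor implied by the total (total / 100, rounded to an integer),
# which absorbs the rounding nodetool applies to each percentage.
implied_replication_factor() {
  sum_effective_ownership | awk '{ printf "%.0f\n", $1 / 100 }'
}
```

For the commerces output above, `nodetool status commerces | sum_effective_ownership` should give 200.0 (76.0 + 64.7 + 59.3), and `implied_replication_factor` should give 2.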

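In the output above, Owns (effective) is not 100% on any node: with replication factor 2 on 3 nodes, the values add up to 200% (76.0% + 64.7% + 59.3%). The fix is to change the replication_factor of the commerces keyspace so it matches the other keyspaces. From cqlsh, run: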

    -- template
    ALTER KEYSPACE KEYSPACE_NAME WITH REPLICATION = { 'class' : 'SimpleStrategy', 'replication_factor' : NUMBER };

    -- example
    ALTER KEYSPACE "commerces" WITH REPLICATION = { 'class' : 'SimpleStrategy', 'replication_factor' : 3 };
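If you prefer to run the change from a shell script instead of an interactive cqlsh session, a small helper can build the statement and reject obviously bad input. This is a sketch assuming bash; alter_replication_stmt is a hypothetical name, and the identifier check is a deliberately loose assumption about valid keyspace names.

```bash
#!/usr/bin/env bash
# Sketch: print the ALTER KEYSPACE statement for a keyspace and replication factor.
# alter_replication_stmt is a hypothetical helper, not part of Cassandra.
alter_replication_stmt() {
  local keyspace="$1" rf="$2"
  # Loose check: letters, digits and underscores only (assumed valid identifier).
  if ! [[ "$keyspace" =~ ^[A-Za-z0-9_]+$ ]]; then
    echo "invalid keyspace name: $keyspace" >&2
    return 1
  fi
  # The replication factor must be a positive integer.
  if ! [[ "$rf" =~ ^[1-9][0-9]*$ ]]; then
    echo "replication factor must be a positive integer: $rf" >&2
    return 1
  fi
  printf "ALTER KEYSPACE \"%s\" WITH REPLICATION = { 'class' : 'SimpleStrategy', 'replication_factor' : %s };\n" "$keyspace" "$rf"
}
```

Usage: `cqlsh -u USER -p PASSWORD IP-SERVER-CASSANDRA -e "$(alter_replication_stmt commerces 3)"`. The statement only changes the keyspace metadata; existing data still has to be synced with a repair afterwards.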

The replication settings of every keyspace can also be checked with nodetool describecluster. The output below is from Cassandra 5.0.4; the Keyspaces section may not be present in older versions.

$ nodetool describecluster

Cluster Information:

Name: cassandra-stg-01

Snitch: org.apache.cassandra.locator.SimpleSnitch

DynamicEndPointSnitch: enabled

Partitioner: org.apache.cassandra.dht.Murmur3Partitioner

Schema versions:

e2da5d42-7b0f-3a46-9bbd-d8184662bf8b: [192.168.88.34, 192.168.88.29, 192.168.88.31]

Stats for all nodes:

Live: 3

Joining: 0

Moving: 0

Leaving: 0

Unreachable: 0

Data Centers:

datacenter1 #Nodes: 3 #Down: 0

Database versions:

5.0.4: [192.168.88.34:7000, 192.168.88.29:7000, 192.168.88.31:7000]

Keyspaces:

system_auth -> Replication class: SimpleStrategy {replication_factor=1}

system_distributed -> Replication class: SimpleStrategy {replication_factor=3}

system_traces -> Replication class: SimpleStrategy {replication_factor=2}

ecommerce -> Replication class: SimpleStrategy {replication_factor=3}

data -> Replication class: SimpleStrategy {replication_factor=3}

commerces -> Replication class: SimpleStrategy {replication_factor=3}

system_schema -> Replication class: LocalStrategy {}

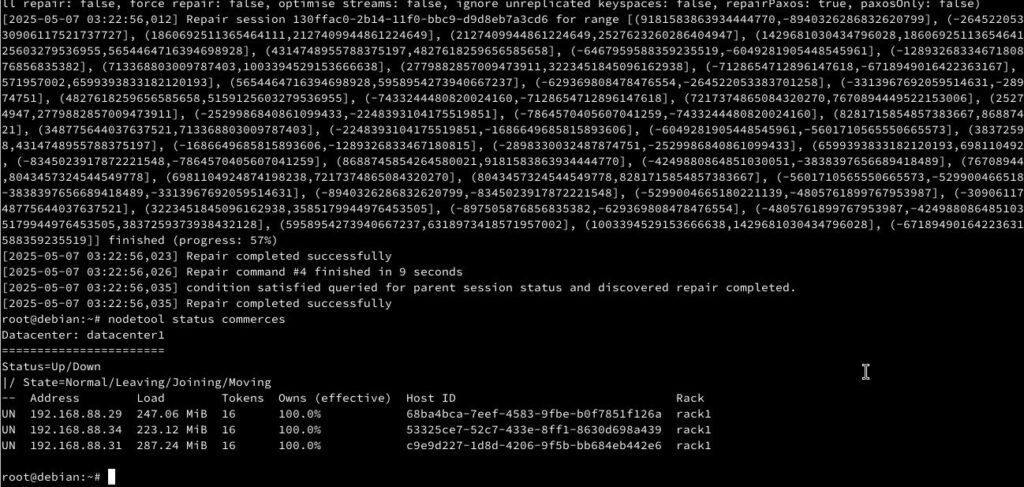

system -> Replication class: LocalStrategy {}terakhir jalankan nodetool repair --full, untuk sync data antar node. Lamanya proses ini bergantung dari jumlah data yang di sync, pada kasus ini 200-an MB hanya membutuhkan waktu 9 detik. Setelah selesai di repair jalankan kembali nodetool status dan nodetool status commerces
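A minimal sketch for that final check, assuming bash and grep: it only looks for the note's text in the plain nodetool status output, so it confirms that the user keyspaces now share the same replication settings, not that the repair itself succeeded. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if `nodetool status` no longer prints the
# replication-mismatch note. ownership_is_meaningful is a hypothetical helper.
ownership_is_meaningful() {
  # -F: match the note as a fixed string; -q: just set the exit status.
  if grep -qF "Non-system keyspaces don't have the same replication settings"; then
    echo "Note still present: user keyspaces still have different replication settings" >&2
    return 1
  fi
  echo "OK: nodetool status reports effective ownership"
}
```

Usage: `nodetool status | ownership_is_meaningful`. Before the ALTER KEYSPACE above it should fail; afterwards it should print the OK line.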